Recently I worked with a client to fix their server, which had been down for hours. He is, in his words, “just technical enough to be dangerous”, which is a sentiment to which I strongly relate.

He shared his screen so that I could give him suggestions on next steps. Every now and then he switched over to a ChatGPT window to get detailed instructions about what he was trying to do. Having never used it this way, I was shocked at how effective the technique was. It made me question the value of years of experience solving these sorts of problems. If a large language model (LLM) could answer all his questions, what was he paying me for?

Our big problem was moving files from one server to another. Normally this is trivial, but he couldn’t connect to the broken server except via DigitalOcean’s Recovery Console. He tried adding a public key to allow us to connect via SSH, but the key was corrupted when he tried to paste it in. For some reason the lower case letters became upper case and the other way around.[1] He found a way to change the keyboard settings on the terminal, which fixed things until he tried pasting in the key again. He was in a loop of typing questions into ChatGPT and getting answers that solved our next problem.

And yet . . . we were no closer to a solution. As I watched him try one command after another, I slowly realized he was digging a deeper hole with no promise he was digging in the right spot. Finally I stepped in with a suggestion for a completely new approach: attach a network file system to the failed server, copy the files there and mount the file system on the new server.[2] That solved the problem.
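
The copy step of that approach can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a record of the commands used that day: it assumes the volume is already attached and mounted, and the helper names `copy_to_volume` and `count_files` are hypothetical.

```python
import shutil
from pathlib import Path


def copy_to_volume(source: Path, mount_point: Path) -> Path:
    """Copy the `source` directory into `mount_point`, keeping its name."""
    if not source.is_dir():
        raise FileNotFoundError(f"source directory not found: {source}")
    # This only checks that the directory exists. Verifying that a volume is
    # really mounted there (e.g. with os.path.ismount) is left out of the sketch.
    if not mount_point.is_dir():
        raise FileNotFoundError(f"mount point does not exist: {mount_point}")

    destination = mount_point / source.name
    # symlinks=True copies symlinks as links instead of following them;
    # dirs_exist_ok=True (Python 3.8+) allows re-running after a partial copy.
    shutil.copytree(source, destination, symlinks=True, dirs_exist_ok=True)
    return destination


def count_files(root: Path) -> int:
    """Count regular files under `root` as a rough sanity check."""
    return sum(1 for p in root.rglob("*") if p.is_file())
```

Calling `copy_to_volume(Path("/var/discourse"), Path("/mnt/rescue"))` would copy the directory to `/mnt/rescue/discourse`, where `/mnt/rescue` is a made-up mount path. Comparing `count_files` on both sides gives a quick check before detaching the volume. Note that `shutil` does not preserve file ownership, so on a real server a tool such as `rsync` or `cp -a` is the more usual choice.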

Technology has been making finding answers easier and easier

This isn’t an anti-AI story, though. While my client was using ChatGPT, I was using Google to get similar answers. Frequently my searches turned up Stack Overflow questions, which usually had generally applicable answers. ChatGPT gives customized answers that match the specific technology in question and even fills in variable names if provided. When your system is down and the pressure is on, it’s certainly handy to have an answer that can be copied and pasted directly without needing to edit anything.

When measuring the effectiveness of Q&A sites, median time-to-first-answer stands out. When I started as a programmer, physical manuals were cutting-edge technology. A few years later I got a collection of O’Reilly books on CD-ROM, so I could search them for answers. The internet, especially USENET newsgroups, opened up more ways to get answers. The Google search engine sped up problem solving by providing relevant answers on the first page of results. It’s starting to look like ChatGPT has revolutionized our access to information yet again. In the past, it might take days to get the answer that now comes seconds after expressing a wish for it.

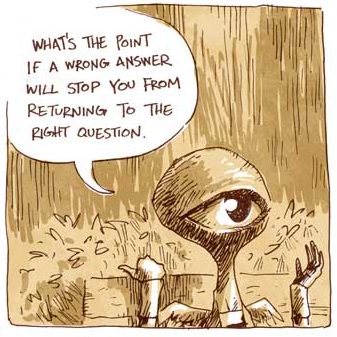

What’s wrong with that?

Always having an answer means never reconsidering the question

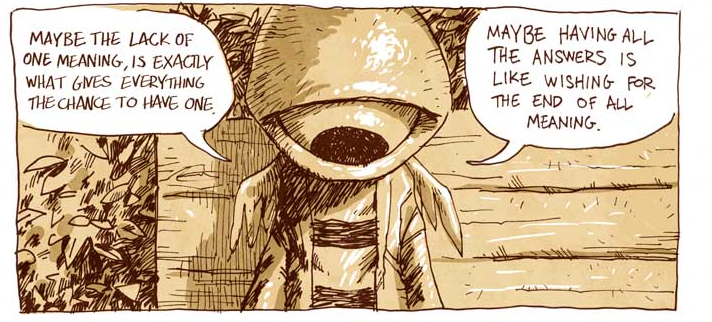

LLMs provide an infinite supply of answers via clever programming. Google always gives answers too,[3] but since it doesn’t (and can’t) change the sites it indexes, it’s not persuading you that answers exist where they ain’t. Many people are comforted by believing they have answers to important questions, but the world isn’t solved just yet.

That’s pretty comforting too, if you think about it.

I’ve drawn a lot of my thinking (and taken frames) from a comic called “A Day in the Park”. It was created by Kostis Kiriakakis. Recently he wrote a comic about AI and plagiarism and corporate greed. Well worth reading!

[1] After solving my client’s problem, I looked into the issue we were having with DigitalOcean’s recovery console. I haven’t tracked it down completely, but I did find evidence that what we experienced isn’t unique to us: “Droplet Console - Paste Text is Inverting Case”. My solution was to create a new volume, copy /var/discourse to the volume and reattach it to the new Droplet.

[2] I recalled this option from reading MKJ’s Opinionated Discourse Deployment Configuration.

[3] Lately I’ve noticed Google giving results for queries that ought to show no results because no page has used the string. For example, “Spat lack crate late” doesn’t show up on the internet until I post this article. But Google gamely shows me YouTube videos and pages about late model dirt racing, for some reason. I suspect Google is working to replicate AI in this, and it’s not a good idea.